Tsinghua Reconfigurable Computing Team Proposes a New Memory Optimization Approach for AI Chips, Significantly Improving Their Energy Efficiency

The 45th International Symposium on Computer Architecture (ISCA) was held in Los Angeles, California, from June 2 to June 6. On June 4, Fengbin Tu, a PhD student at the Institute of Microelectronics, Tsinghua University, gave an oral presentation titled “RANA: Towards Efficient Neural Acceleration with Refresh-Optimized Embedded DRAM”. The approach presented in this work can significantly improve the energy efficiency of artificial intelligence (AI) chips.

Fengbin Tu, PhD student at the Institute of Microelectronics, gave an oral presentation at ISCA 2018.

ISCA is one of the top conferences in computer architecture. This year, 378 papers were submitted and only 64 were accepted, an acceptance rate of 16.9%. The work by Fengbin Tu et al. is the only ISCA 2018 paper first-authored by a Chinese research team (the Tsinghua Reconfigurable Computing Team). Fengbin Tu is the first author, and Shouyi Yin, Associate Professor at the Institute of Microelectronics, is the corresponding author. The paper is co-authored by Prof. Shaojun Wei, Prof. Leibo Liu and master's student Weiwei Wu, all of the Institute of Microelectronics, Tsinghua University.

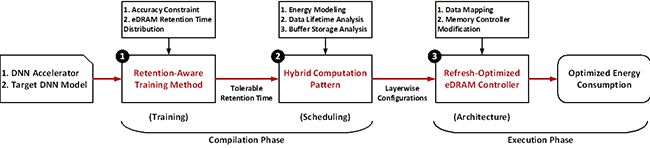

The growing size of deep neural networks (DNNs) demands large amounts of on-chip storage. In many DNN accelerators, limited on-chip memory capacity causes massive off-chip memory access, which leads to very high system energy consumption. Memory optimization is therefore an important problem in AI chip design.

The Tsinghua Reconfigurable Computing Team proposed a Retention-Aware Neural Acceleration (RANA) framework that reduces the total system energy consumption of DNN accelerators by using refresh-optimized embedded DRAM (eDRAM). eDRAM is denser than SRAM, so the same area holds more data on chip, but its cells leak charge and must be refreshed periodically, and these refresh operations cost energy. The RANA framework includes techniques at three levels: a retention-aware training method, a hybrid computation pattern and a refresh-optimized eDRAM controller. At the training level, the error resilience of DNNs is exploited during training to extend eDRAM's tolerable retention time. At the scheduling level, RANA assigns each DNN layer the computation pattern that consumes the least energy. At the architecture level, a refresh-optimized eDRAM controller eliminates unnecessary refresh operations. With the RANA framework, 99.7% of eDRAM refresh operations can be removed with negligible performance and accuracy loss. Compared with a conventional SRAM-based DNN accelerator of the same area, an eDRAM-based DNN accelerator strengthened by RANA reduces off-chip memory access by 41.7% and system energy consumption by 66.2%. The proposed framework significantly improves the energy efficiency of AI chips.

RANA: Retention-aware neural acceleration framework.
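The interplay between the training-level and scheduling-level techniques can be illustrated with a toy model. The Python sketch below assumes that data consumed within one eDRAM retention period needs no refresh, and that each layer simply picks whichever candidate computation pattern minimizes off-chip access energy plus refresh energy. The class `Pattern`, the functions `refresh_passes`, `layer_energy` and `pick_pattern`, the pattern labels, and every energy constant and timing value are hypothetical illustrations, not taken from the RANA paper; this is not the authors' implementation.

```python
import math
from dataclasses import dataclass

# Illustrative energy constants in arbitrary units (assumed, NOT from the paper).
E_OFFCHIP_PER_WORD = 200.0    # energy per off-chip DRAM word access
E_REFRESH_PER_PASS = 10_000.0  # energy to refresh the occupied eDRAM buffer once


@dataclass
class Pattern:
    """One candidate computation pattern for a layer (hypothetical model)."""
    name: str
    offchip_words: int       # off-chip words moved under this pattern
    data_lifetime_us: float  # longest time a buffered value waits in eDRAM


def refresh_passes(lifetime_us: float, retention_us: float) -> int:
    """Refreshes needed to keep data valid until it is consumed.

    Data consumed within one retention period needs no refresh at all;
    otherwise one refresh is issued per elapsed retention period.
    """
    return max(0, math.ceil(lifetime_us / retention_us) - 1)


def layer_energy(p: Pattern, retention_us: float) -> float:
    """Toy energy model: off-chip traffic plus eDRAM refresh cost."""
    return (p.offchip_words * E_OFFCHIP_PER_WORD
            + refresh_passes(p.data_lifetime_us, retention_us) * E_REFRESH_PER_PASS)


def pick_pattern(patterns: list[Pattern], retention_us: float) -> Pattern:
    """Scheduling step: choose the lowest-energy pattern for one layer."""
    return min(patterns, key=lambda p: layer_energy(p, retention_us))


# Two hypothetical patterns for the same layer: one moves less off-chip data
# but keeps values in eDRAM longer; the other trades traffic for short lifetimes.
candidates = [
    Pattern("keep-inputs", offchip_words=1_000, data_lifetime_us=600.0),
    Pattern("keep-outputs", offchip_words=1_300, data_lifetime_us=30.0),
]

# Assumed tolerable retention times before and after retention-aware training.
for retention in (45.0, 700.0):
    best = pick_pattern(candidates, retention)
    print(retention, best.name, layer_energy(best, retention))
# prints:
# 45.0 keep-outputs 260000.0
# 700.0 keep-inputs 200000.0
```

In this toy setting, a short retention time makes the long-lived pattern pay for 13 refresh passes, so the scheduler prefers the pattern with more off-chip traffic. Once the tolerable retention time exceeds every data lifetime, refresh disappears and the pattern with less off-chip traffic wins, which mirrors, in a simplified way, why extending retention time and choosing patterns per layer reinforce each other.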

In recent years, the Tsinghua Reconfigurable Computing Team has designed a series of AI chips based on reconfigurable architectures (Thinker I, Thinker II and Thinker S), which have attracted attention from researchers and engineers around the world. The work presented at ISCA 2018 significantly improves the energy efficiency of AI chips through memory optimization and software-hardware co-design, pointing to a new direction for the architectural development of AI chips.

Editors: John Olbrich, Zhu Lvhe